无人车实时优化-文件IO对程序影响

-

date_range 09/04/2019 info

背景

流向

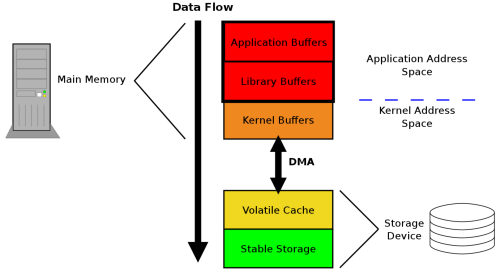

要理解Linux下文件IO对程序的影响, 首先从数据流向来理解. 如下图是Linux下的

userspace

用户层

fwrite: application –> library bufferfflush: library buffer –> kernel buffer

fwrite会在buffer到达libfrary buffer max大小的时候, 触发一次flsuh.

kernel

kernel space的文件存储框架比较复杂, 有兴趣可以看看下面这个图.

PS: top上看到的buff/cache就是这里的page cache.

强制刷新kernel buffer的用户层函数是:

- fsync: kernel buffer –> dma -> device

时序

下图是默认使用write/read ioctl下的app/kernel时序

测试

下面做一些实验.

读写开销

主要关注的是写开销(读开销涉及到具体的disk io, 不用测了, 必然是大毫秒级别的延迟)

如下是测试程序

#include <chrono>

#include <fcntl.h>

#include <iostream>

#include <stdio.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <string.h>

#include <unistd.h>

#include <unistd.h>

#include <ros/ros.h>

int main(int argc, char **argv) {

FILE *stream;

/* create a file */

/* fwrite */

for (uint32_t size = 10; size < 1000 * 10; size *= 10) {

double avg = 0;

double max = 0;

stream = fopen("test.txt", "w");

for (int i = 0; i < 1024 * 10; i++) {

uint8_t *buffer = new uint8_t(size);

std::chrono::steady_clock::time_point begin =

std::chrono::steady_clock::now();

/* write some data to the file */

fwrite(buffer, size, 1, stream);

std::chrono::steady_clock::time_point end =

std::chrono::steady_clock::now();

float diff = std::chrono::duration<float>(end - begin).count() * 1000;

if (diff > max)

max = diff;

avg += diff;

free(buffer);

// fflush(stream);

}

std::cerr << "FWRITE: Avg = " << avg / 10240 << " Max = " << max

<< " Szie = " << size << std::endl;

fclose(stream);

usleep(1000 * 1000);

}

usleep(1000 * 1000);

/* fwrite + fflush */

for (uint32_t size = 10; size < 1000 * 10; size *= 10) {

double avg = 0;

double max = 0;

stream = fopen("test.txt", "w");

for (int i = 0; i < 1024 * 10; i++) {

uint8_t *buffer = new uint8_t(size);

std::chrono::steady_clock::time_point begin =

std::chrono::steady_clock::now();

/* write some data to the file */

fwrite(buffer, size, 1, stream);

fflush(stream);

std::chrono::steady_clock::time_point end =

std::chrono::steady_clock::now();

float diff = std::chrono::duration<float>(end - begin).count() * 1000;

if (diff > max)

max = diff;

avg += diff;

free(buffer);

}

std::cerr << "FWRITE+FFLUSH: Avg = " << avg / 10240 << " Max = " << max

<< " Szie = " << size << std::endl;

fclose(stream);

usleep(1000 * 1000);

}

/* sysio */

for (uint32_t size = 10; size < 1000 * 10; size *= 10) {

double avg = 0;

double max = 0;

int fd = open("test.txt", O_RDWR | O_CREAT | O_TRUNC, S_IRUSR | S_IWUSR);

for (int i = 0; i < 1024 * 10; i++) {

uint8_t *buffer = new uint8_t(size);

std::chrono::steady_clock::time_point begin =

std::chrono::steady_clock::now();

write(fd, buffer, size);

std::chrono::steady_clock::time_point end =

std::chrono::steady_clock::now();

float diff = std::chrono::duration<float>(end - begin).count() * 1000;

if (diff > max)

max = diff;

avg += diff;

free(buffer);

// fsync(fd);

// fflush(stream);

}

std::cerr << "WRITE: Avg = " << avg / 10240 << " Max = " << max

<< " Szie = " << size << std::endl;

close(fd);

usleep(1000 * 1000);

}

/* sysio */

for (uint32_t size = 10; size < 1000 * 10; size *= 10) {

double avg = 0;

double max = 0;

int fd = open("test.txt", O_RDWR | O_CREAT | O_TRUNC, S_IRUSR | S_IWUSR);

for (int i = 0; i < 1024; i++) {

uint8_t *buffer = new uint8_t(size);

std::chrono::steady_clock::time_point begin =

std::chrono::steady_clock::now();

write(fd, buffer, size);

fsync(fd);

std::chrono::steady_clock::time_point end =

std::chrono::steady_clock::now();

float diff = std::chrono::duration<float>(end - begin).count() * 1000;

if (diff > max)

max = diff;

avg += diff;

free(buffer);

}

std::cerr << "WRITE+FSYNC: Avg = " << avg / 1024 << " Max = " << max

<< " Szie = " << size << std::endl;

close(fd);

usleep(1000 * 1000);

}

/* ROSINFO */

{

ros::init(argc, argv, "logtest");

double avg = 0;

double max = 0;

for (int i = 0; i < 1024 * 1000; i++) {

std::chrono::steady_clock::time_point begin =

std::chrono::steady_clock::now();

ROS_WARN("HELLO_WORLD!");

std::chrono::steady_clock::time_point end =

std::chrono::steady_clock::now();

float diff = std::chrono::duration<float>(end - begin).count() * 1000;

if (diff > max)

max = diff;

avg += diff;

}

std::cerr << "ROS_INFO: Avg = " << avg / 1024000 << " Max = " << max

<< std::endl;

}

return 0;

}

编译命令

g++ test.cpp -std=c++11 -I /opt/ros/kinetic/include -L /opt/ros/kinetic/lib/ -lroscpp -lrosconsole

运行时候开启ros日志的fileappender.

运行命令:

./a.out >/dev/null

测试结果

FWRITE: Avg = 0.000591312 Max = 5.52133 Szie = 10

FWRITE: Avg = 0.000166351 Max = 0.388972 Szie = 100

FWRITE: Avg = 0.00145694 Max = 1.02567 Szie = 1000

FWRITE+FFLUSH: Avg = 0.00626838 Max = 4.74133 Szie = 10

FWRITE+FFLUSH: Avg = 0.0081963 Max = 18.0153 Szie = 100

FWRITE+FFLUSH: Avg = 0.00675015 Max = 2.70021 Szie = 1000

WRITE: Avg = 0.00874859 Max = 14.97 Szie = 10

WRITE: Avg = 0.00862502 Max = 15.8307 Szie = 100

WRITE: Avg = 0.00886952 Max = 15.4857 Szie = 1000

WRITE+FSYNC: Avg = 7.78275 Max = 46.7373 Szie = 10

WRITE+FSYNC: Avg = 7.35126 Max = 28.802 Szie = 100

WRITE+FSYNC: Avg = 7.51313 Max = 17.2564 Szie = 1000

ROS_INFO(fileappender): Avg = 0.00812843 Max = 66.2458

ROS_INFO(normal): Avg = 0.00317588 Max = 61.1018

内核写回开销

最终的写回操作是由kernel space的flusher thread完成.

pages写回到磁盘,有这三种情况会触发:

- 可用内存少于一个阀值,write back了dirty pages才可能释放这些cache

-

可以通过sysctl -a grep vm.dirty_background_ratio 来看, 默认是10%

-

- sync() & fsync() 等系统调用。

- 脏数据老于一个阀值, 避免脏数据一直在内存

- 可以通过/proc/sys/vm/dirty_expire_centisecs来看, 默认是30s

- 还有一个参数是 vm.dirty_writeback_centisecs, 默认是5s, 这个是writeback线程的唤醒间隔

下面命令还可以看当前dirty page的情况

date && cat /proc/vmstat | grep -E "dirty|writeback"

测试上直接用下面命令写磁盘

sudo dd if=/dev/zero of=./test conv=fsync

通过top可以看到, 5秒后会出现了一个kworker, cpu占用率比较高

13755 root 20 0 6048 664 600 R 89.4 0.0 0:19.75 dd

12835 root 20 0 0 0 0 D 13.2 0.0 0:01.39 kworker/u32:3

结论

读写调用

- 不涉及系统调用的write开销: us-

- write的系统调用: us+

- 同步写磁盘: 10ms+

-

默认write操作会有延迟到10ms+的情况, 是因为触发同步了?

- 使用第三方日志库的时候, 一定要注意背后同步写文件操作.

- 比如说ROS中启用FILEAPPNDER记录日志

- 测试中发现, ROS打印日志在不写文件的时候, 也有60ms+的延迟… 应该藏着什么坑

写回

- 写回线程的priority是默认值, 和其他程序会cfs分享cpu.

- 300mb/s的磁盘写入速度下, 测试x86机子上的cpu开销在13.2%